Rising discrimination and data colonialism are among the negative aspects of this technology highlighted by experts. The artificial intelligence (AI) revolution has a dark side. That is the warning from digital rights advocates and activists who gathered in Berlin for the latest re:publica technology conference, which ended this Wednesday (29/05).

“New patterns of discrimination are emerging thanks to AI and algorithms,” said Ferda Ataman, Germany’s independent federal anti-discrimination commissioner. She warned that artificial intelligence, if left unchecked, could exacerbate discrimination by perpetuating existing prejudices.

The recent growth of AI technology has led to remarkable advances, from innovative methods of diagnosing and treating cancer to new systems that allow anyone, regardless of technical knowledge, to create images and videos from scratch.

However, several experts warned at the conference that the downsides of the technology are less visible, and that vulnerable communities on the margins of society and in the Global South will be the first to suffer the consequences.

AI Surveillance

The way artificial intelligence is being used to take surveillance to unprecedented levels illustrates this situation well, according to Antonella Napolitano, a technology policy analyst.

In a report published last year by the human rights organization EuroMed Rights, Napolitano argued that, as part of a broader trend to “outsource” control of its external borders, the European Union is funding companies that are testing new AI-based surveillance tools on migrants in North Africa, whose privacy is not as protected as that of EU citizens.

“It’s easier for companies to test different technologies on these people without facing consequences,” Napolitano told DW. “But the consequences are real,” she said, adding that surveillance through artificial intelligence can push migrants onto more dangerous migration routes.

“Data Colonialism”

Reports like this have led some digital rights advocates to draw parallels with the history of colonialism, which dates back to the 15th century, when European powers began exploiting large parts of the world.

Another aspect of this new “data colonialism” is that many Western companies have gone to the Global South to collect data, says Mercy Mutemi, a Kenyan digital rights lawyer. “History is repeating itself,” she said.

Most of today’s cutting-edge AI systems rely on massive amounts of data. To meet this demand, Western companies are mining data on individuals across Africa on a large scale, often without consent and with little or no benefit in return, Mutemi said. The lawyer is involved in a dozen ongoing cases that challenge the role of Western tech companies in her home country.

This phenomenon is likely to intensify in the coming years as demand for data in AI technology continues to grow, she added.

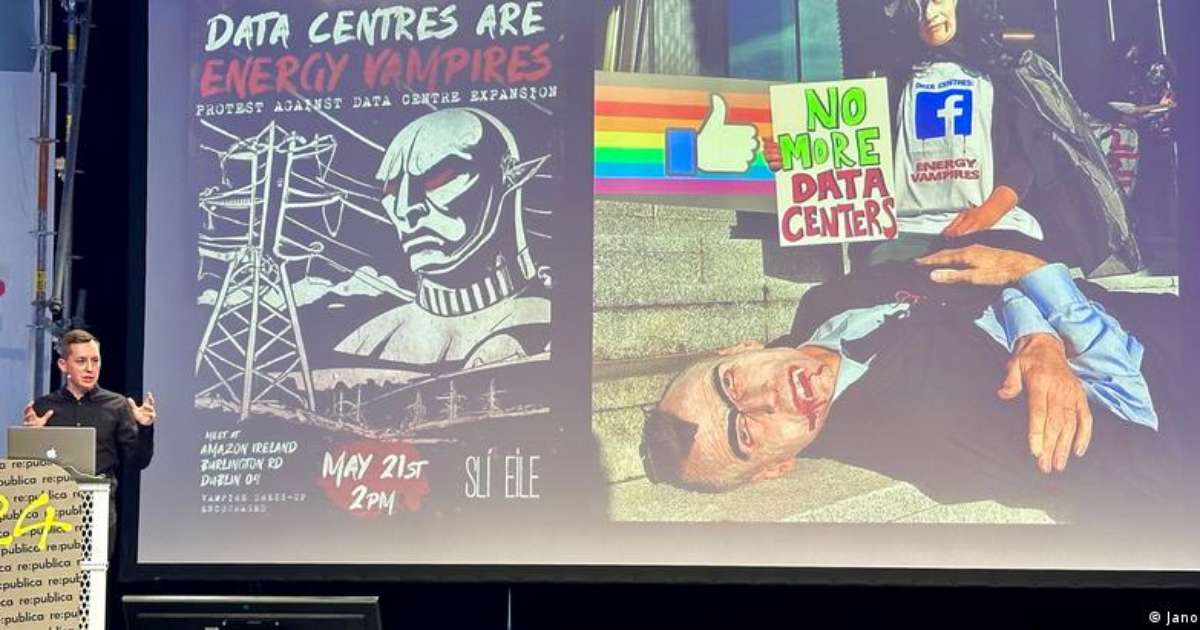

Carbon Footprint

The rapid pace of AI development also raises concerns about its environmental impact. Training and running complex AI models require huge processing capacity in data centers, which means high energy and water consumption and greenhouse gas emissions.

Data centers already account for more than 2% of global electricity consumption, according to estimates from the International Energy Agency.

The development of energy-intensive AI tools will further increase energy consumption, according to Canadian author and technology critic Paris Marx.

Marx warned of an “AI-powered data center boom” driven by the commercial interests of a few powerful tech companies. “There’s been a huge investment in building data centers around the world,” Marx said at the conference.
