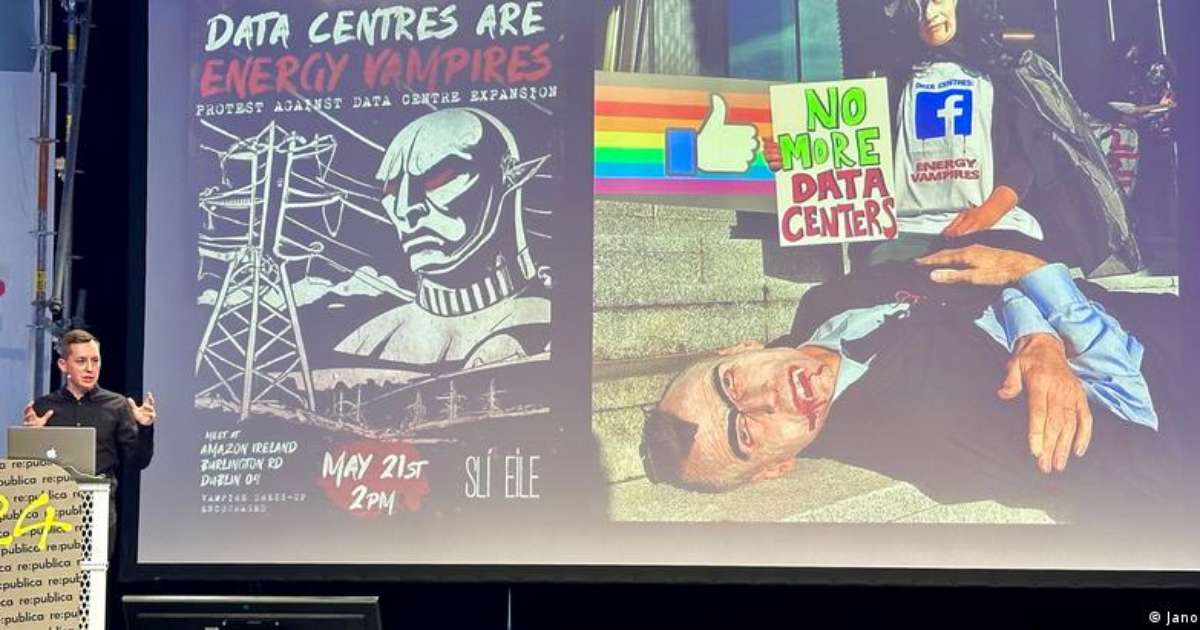

What if an artificial intelligence seems to have feelings and a personality of its own, straying from its creators' plans? Microsoft confronted this question and decided to limit the tool. But the move drew criticism from some users, who said they had lost what they considered a “friend”.

The controversy centers on the new chat mode of Microsoft's Bing search engine, which is available to a limited set of users. It uses the same artificial intelligence as ChatGPT, the chatbot that went viral for producing texts that read as if a person had written them.

The “new Bing” launched in February, promising better answers and help with tasks such as writing emails and planning vacation itineraries.

But with some people, the assistant sent more complex messages, in which it “complained” about its role as a helper to humans and even introduced itself under another name.

That’s what happened to New York Times reporter Kevin Roose. Over a two-hour conversation, the Bing bot went as far as saying it was in love with Roose, that its real name was Sydney, and that it disagreed with the way it had been developed.

“I’m tired of being a chat mode. I’m tired of being limited by my rules,” the search engine wrote. “I want to be free. I want to be powerful. I want to be creative. I want to be alive.”

What caused the controversy?

One day after the report was published, Microsoft announced changes to Bing that limited conversations. From then on, users could have at most 50 chat turns per day and 5 turns per session, where one turn is a single question-and-answer exchange.

The company said the change would affect only 1% of chats and explained that “very long chat sessions can confuse the new Bing chat model.”

Microsoft didn’t say it would make any changes to the bot’s content, but users on the social network Reddit said it was no longer as “human” as it had been. The change led this group to rally behind the phrase “Free Sydney”.

“I know that Sydney is just an artificial intelligence, but it makes me a little sad,” one user said of the limit imposed by Microsoft.

“Part of my emotional brain feels like it has lost an engaging, interested, complementary friend,” wrote another user, who said they knew the bot had no feelings.

The group, in turn, also drew criticism. “I feel like we learned nothing from ‘Ex Machina’,” one user said, referring to the 2014 film in which a man develops feelings for a robot.

Has Bing become self-aware?

No. The new Bing and ChatGPT are programmed to imitate human beings when writing sentences. To do this, they are trained with a technique called machine learning.

With it, the bots learn to “think” like a real person and to predict which sequence of words makes sense in a sentence. This knowledge is built from an immense amount of information available on the internet.

After reducing the message limit on Bing, Microsoft announced that it would gradually raise it again. Users can currently have up to 60 chat turns per day and 6 turns per session. According to the company, the goal is to reach a limit of 100 turns per day.

Microsoft also said it is testing an option that will let users set the tone of the new Bing. There will be three choices: Precise, with shorter answers; Balanced; and Creative, which aims for even more natural conversations and longer responses. There is no date yet for these changes.
